Since I am currently a graduate student, I was eligible to represent my school, and attend the Krannert Walmart Data Dive at Purdue this past weekend. It was a fun experience, and if given the chance, I would do it again. I learned a lot, and the experience was invaluable.

Here’s what I considered to be the top takeaways.

1. Your organization doesn’t need to buy data in order to come up with powerful and actionable insights that you can use.

The data we were given contained all kinds of info, internal and external, on every individual. With so little time with which to come up with something, my team couldn’t afford to bring in very much. We stuck to the basics, namely purchasing totals, RFM and CLV.

2. Keep it simple.

The simplest insights are going to be the most powerful. Especially when your audience are non-technical. Data mining is all about reducing the noise.

3. R is king. Coding is king.

The team that was adept in R went the farthest and the fastest. They also won. My team wrote sql and exported csv’s to use with SAS. You can probably imagine how that worked: back and forth between the two applications as we tried different approaches. An odbc connection in R would’ve been amazing. I’m going to get that all going on, very soon.

4. WalMart data is massive.

You wouldn’t believe it.

5. Presentation and being able to sell your ideas is just as important as the work you do.

This has been a hard lesson for me, as I’m an introvert and I have not ever enjoyed getting out in front of people very much. But if I am having good ideas, and I’m not ever selling them, what good are my ideas? As I’m realizing the folly of my ways, and getting out there, I’m finding that I don’t dread it as much, because I’m speaking about things I’m passionate about, anyway.

6. I have a lot more to learn.

There are always more approaches and more tools to find out about, and implement. Growth is happening at an exponential rate. We are living in an exciting time, and we’re in the most amazing field. Hearing about how 19 teams answered the same 2 questions in completely different, and yet valid ways, was pretty darn cool.

Data Science and Analytics Related to work in Non-Profits: Fundraising, Constituent Modeling, People Analytics.

Tuesday, April 5, 2016

Tuesday, February 23, 2016

Using Data Marts to Move From Reactivity to Proactivity

I'd like to share a few methods that have enabled me to move from being almost entirely reactive in my work (simply fulfilling requests), to being proactive, where I have the ability to generate deliverables that will inform decision-making and drive strategy.

Several years ago, when I first began to learn about predictive analytics, I started out by reading books. The concepts in these books seemed almost unattainable to me. My organization at that time had just become a client of a consulting firm. We were very excited about adopting these ideas and methods, and the first thing the consultant asked me to provide him with, was a list of variables, attached to a random sample of IDs from our database. It actually took me a couple of days to make that file. And after that, there was at least another day’s worth of effort of data prep involved, as the consultant and I recoded those variables into binary flags in SPSS, so that we could even begin to run a regression and develop some models.

Fast forward a few years. I found myself in a position where I was suddenly supporting dozens of different areas. I developed processes to help me provide deliverables in a reasonable amount of time. After I had done this, my work life became much easier. I realized I should have been establishing these kinds of shortcuts in the first place. This should have been the first step.

We need to be in a position to quickly answer the questions that our colleagues ask us about our data. We also need to be in a position to quickly fulfill our own curiosity. We can only do this if the data is in a format that is readily available.

This is something that doesn’t tend to be discussed in books I’ve read about statistics or analytics. But it’s absolutely critical to be able to act on ideas, and to answer the questions that are relevant to business needs.

ART AND SCIENCE

In discussing our work, we hear a lot about this dichotomy of the art and science of fundraising, because it applies, right?

The C suite embodies the art of fundraising. Executives, gift officers and administrators use their experience and knowledge to guide the process. Programmers, researchers, and data scientists reveal trends in the data, and their explorations are similar to those of a scientist, who runs tests to determine validity. These activities work together to (hopefully) form essential symbiosis in organizations.

CRAFT

We hear a lot about the art and science of fundraising, but I believe there is a third essential concept not often mentioned. And this is where the work of the business analyst comes in. A successful business analyst bridges the gap between art and science.

I think of the business analyst as embodying “craft.” There is a technical aspect to craft, as well as an artistic aspect to it. The artful aspect involves knowing what to communicate, and how. It also involves knowing about data, and how to transform it from raw data into workable data. I talk about these concepts or activities as different roles. But in many organizations, different roles can (and often will) be fulfilled by a single person.

DATA TEMPLATES

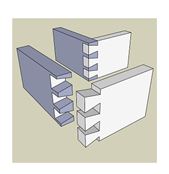

Not so long ago, I picked up a new hobby, doing woodworking. My goal was to build a guitar. I had never owned any woodworking tools before. I didn’t know how it was done. My image or idea of a woodworker, was that they would just measure and cut boards and fit them together. *Or*, that they would just carve on things until the wood had the shape that they wanted. I didn’t ever think this work was easy, I just imagined them going slowly in order to attain the result that they desired. I didn’t think there was much more to it, than that.

But once I got into it, and I began to learn more about it, I realized that woodworking craftsmen will use all kinds of templates, or shortcuts. Woodworkers call these things “jigs.” These templates will help make their work be precise as well as repeatable. It also reduces risk of failure, and it helps them to be very exact in a short amount of time. Sometimes they will execute many complicated steps of a process very quickly.

To a woodworker, the risk is to ruin their resources, the wood, which can be very expensive. In much the same way, we as analytics practitioners don’t have unlimited time or employees to spend working on our projects.

As time went by, and I began to meet more experienced guitar builders, one thing that I noticed, is that all of the good ones had unique and creative solutions to universal problems. They also made their own tools and templates. How fascinating this was to me! These homemade solutions always served as time-saving patterns to help or assist them in crafting a final product, a product which hopefully:

(a) will produce minimal waste,

(b) is executed in a timely manner and

(c) has a slim, or no chance of failure. Because no one can afford to fail!

Just like an experienced woodworking craftsman, I sought to leverage my effectiveness by being nimble with data. It should not take as long (or longer) than a half a day to make a table, before we can even get started. We need to have the ability to fulfill our own (as well as others’) curiosity very quickly.

So I began to adopt the same approach in my work as a business analyst

Data templates can help us move from reactivity to proactivity.

BUILDING A DATA MART

I realize that the idea of building a data mart, which we will use as a basis for most of our analytics work, is not a new one, and you’ve most likely heard of it before. A transactional database is full of individual records, not summary data. A data warehouse contains multiple subject areas and can feed dimensional models.

A data mart serves one particular subject area. What I am proposing is that we put a lot of thought into a data mart. Build it so that you can run descriptives and summarize your entire population of people with it, or any segment within. Build it so that it contains all the independent variables you will ever need to build a predictive model. Put key metrics in it, which are central to your organization or business. If your VP asks for a statistic that you can’t provide quickly, add it to the data mart. The data mart should be your life line.

DATA MART EXAMPLE

Here’s an image that shows a few records from an example analytics data mart. This segment has been opened in excel. I don’t recommend housing a data mart in excel. Purely for the sake of example, I’ve given you a link here to an example excel file to download, containing sample data, just so that you can see. Your data mart should be query-able, which is something that excel will not do. In a smaller organization it will be perfectly fine to house your data mart in a tool like MS Access.

In this data mart, I’ve populated it with as many relevant binary flags or variables as I could possibly fill it with. If I’m given a list of people that anyone is curious about, I can add up these flags and produce descriptive statistics very quickly. For example, maybe 100 people went to your event. If you have the IDs of these people, I could then give you the percentage of alumni, current donors, lapsed donors, average age, and other summarized information about this group of people in a few minutes. I could also tell you much more about them. This is not revolutionary, but the point is, that I want to be nimble with data and I want to be able to provide quick response to curiosity. This solution enables that. I also would like for you to notice how I’ve used full words in my data. When I pivot on these values, my query results will be presentable, and will also have titles that don’t look like abbreviations or have unsightly underscores or computer-y characters in them.

CALCULATED VARIABLES

I’ve also put calculated or combined variables in this data mart. These are fields that I’ve had to do some creative coding with, in order to come up with a piece of information that isn’t simply extracted from a database or data warehouse, but is now easily extracted from the data mart. For example, Acquisition Age is intriguing to me and I intend to test it more. It is the age at which someone made their first gift.

Another kind of calculated variable to use, are those that quantify two or more related characteristics into a composite score, such as Affinity (or Engagement). Engagement could be calculated with a dependent variable that is collected by a survey of your staff, collecting the names of the most highly engaged individuals to your institution. You would then use this variable to train a model in order to reveal the relative engagement of your entire population. Another way to measure engagement would be to summarize key connection factors in your database and add them all up, like a score.

Another calculated variable is a simple yes/no, for whether or not an individual is an executive in a company or corporation. I have found this to be a very significant piece of information. Another is Donor Lifetime Value, the net amount you can expect someone to give during the course of their lifetime. We have all heard of RFM, or RFV (Recency, Frequency, Monetary Value), which is very important. Another powerful metric is one that measures recency in giving, where you would take the last 5 years giving total, and divide by the lifetime giving total. This would tell you very quickly what percentage of giving, any individual has made in the last 5 years.

And, of course, anything that can be quantified, can be segmented. Different strategies can be devised which effectively deal with each segment. And almost no work has to be done to do this segmentation, if the data mart is refreshed routinely, and the metrics are tracked.

FORECASTING

Another thing that a data mart can allow you to do more easily, is in the realm of forecasting. This is a great quick example to show how even very simple metrics can inform strategy. I purposely have flags for the last 5 full fiscal years so that I can forecast retention rates for the upcoming fiscal year.

A natural complement to this is a recency/frequency index which can be used to segment potential donors you want to target into more engaged, and less engaged populations.

My data mart flags are for overall giving. If I want to do a similar analysis that is more specific to a certain area of giving, I just use the query which produced the flags. I filter on any fund or allocation. With templates I have set up, I can then forecast for these more specific giving areas, very easily and quickly.

I hope that you will find some benefit to these ideas. I’ve put a lot of work in the last year or so, to reducing the pain of data preparation. I believe it has been worth it. The important thing is to not spend excessive time preparing data. I want my data preparation to be as painless as possible. I do this so that I can get to what really matters, the analysis and the insights that should follow.

Several years ago, when I first began to learn about predictive analytics, I started out by reading books. The concepts in these books seemed almost unattainable to me. My organization at that time had just become a client of a consulting firm. We were very excited about adopting these ideas and methods, and the first thing the consultant asked me to provide him with, was a list of variables, attached to a random sample of IDs from our database. It actually took me a couple of days to make that file. And after that, there was at least another day’s worth of effort of data prep involved, as the consultant and I recoded those variables into binary flags in SPSS, so that we could even begin to run a regression and develop some models.

Fast forward a few years. I found myself in a position where I was suddenly supporting dozens of different areas. I developed processes to help me provide deliverables in a reasonable amount of time. After I had done this, my work life became much easier. I realized I should have been establishing these kinds of shortcuts in the first place. This should have been the first step.

We need to be in a position to quickly answer the questions that our colleagues ask us about our data. We also need to be in a position to quickly fulfill our own curiosity. We can only do this if the data is in a format that is readily available.

This is something that doesn’t tend to be discussed in books I’ve read about statistics or analytics. But it’s absolutely critical to be able to act on ideas, and to answer the questions that are relevant to business needs.

ART AND SCIENCE

In discussing our work, we hear a lot about this dichotomy of the art and science of fundraising, because it applies, right?

The C suite embodies the art of fundraising. Executives, gift officers and administrators use their experience and knowledge to guide the process. Programmers, researchers, and data scientists reveal trends in the data, and their explorations are similar to those of a scientist, who runs tests to determine validity. These activities work together to (hopefully) form essential symbiosis in organizations.

CRAFT

We hear a lot about the art and science of fundraising, but I believe there is a third essential concept not often mentioned. And this is where the work of the business analyst comes in. A successful business analyst bridges the gap between art and science.

I think of the business analyst as embodying “craft.” There is a technical aspect to craft, as well as an artistic aspect to it. The artful aspect involves knowing what to communicate, and how. It also involves knowing about data, and how to transform it from raw data into workable data. I talk about these concepts or activities as different roles. But in many organizations, different roles can (and often will) be fulfilled by a single person.

DATA TEMPLATES

Not so long ago, I picked up a new hobby, doing woodworking. My goal was to build a guitar. I had never owned any woodworking tools before. I didn’t know how it was done. My image or idea of a woodworker, was that they would just measure and cut boards and fit them together. *Or*, that they would just carve on things until the wood had the shape that they wanted. I didn’t ever think this work was easy, I just imagined them going slowly in order to attain the result that they desired. I didn’t think there was much more to it, than that.

But once I got into it, and I began to learn more about it, I realized that woodworking craftsmen will use all kinds of templates, or shortcuts. Woodworkers call these things “jigs.” These templates will help make their work be precise as well as repeatable. It also reduces risk of failure, and it helps them to be very exact in a short amount of time. Sometimes they will execute many complicated steps of a process very quickly.

To a woodworker, the risk is to ruin their resources, the wood, which can be very expensive. In much the same way, we as analytics practitioners don’t have unlimited time or employees to spend working on our projects.

As time went by, and I began to meet more experienced guitar builders, one thing that I noticed, is that all of the good ones had unique and creative solutions to universal problems. They also made their own tools and templates. How fascinating this was to me! These homemade solutions always served as time-saving patterns to help or assist them in crafting a final product, a product which hopefully:

(a) will produce minimal waste,

(b) is executed in a timely manner and

(c) has a slim, or no chance of failure. Because no one can afford to fail!

Just like an experienced woodworking craftsman, I sought to leverage my effectiveness by being nimble with data. It should not take as long (or longer) than a half a day to make a table, before we can even get started. We need to have the ability to fulfill our own (as well as others’) curiosity very quickly.

So I began to adopt the same approach in my work as a business analyst

Data templates can help us move from reactivity to proactivity.

BUILDING A DATA MART

I realize that the idea of building a data mart, which we will use as a basis for most of our analytics work, is not a new one, and you’ve most likely heard of it before. A transactional database is full of individual records, not summary data. A data warehouse contains multiple subject areas and can feed dimensional models.

A data mart serves one particular subject area. What I am proposing is that we put a lot of thought into a data mart. Build it so that you can run descriptives and summarize your entire population of people with it, or any segment within. Build it so that it contains all the independent variables you will ever need to build a predictive model. Put key metrics in it, which are central to your organization or business. If your VP asks for a statistic that you can’t provide quickly, add it to the data mart. The data mart should be your life line.

DATA MART EXAMPLE

Here’s an image that shows a few records from an example analytics data mart. This segment has been opened in excel. I don’t recommend housing a data mart in excel. Purely for the sake of example, I’ve given you a link here to an example excel file to download, containing sample data, just so that you can see. Your data mart should be query-able, which is something that excel will not do. In a smaller organization it will be perfectly fine to house your data mart in a tool like MS Access.

In this data mart, I’ve populated it with as many relevant binary flags or variables as I could possibly fill it with. If I’m given a list of people that anyone is curious about, I can add up these flags and produce descriptive statistics very quickly. For example, maybe 100 people went to your event. If you have the IDs of these people, I could then give you the percentage of alumni, current donors, lapsed donors, average age, and other summarized information about this group of people in a few minutes. I could also tell you much more about them. This is not revolutionary, but the point is, that I want to be nimble with data and I want to be able to provide quick response to curiosity. This solution enables that. I also would like for you to notice how I’ve used full words in my data. When I pivot on these values, my query results will be presentable, and will also have titles that don’t look like abbreviations or have unsightly underscores or computer-y characters in them.

CALCULATED VARIABLES

I’ve also put calculated or combined variables in this data mart. These are fields that I’ve had to do some creative coding with, in order to come up with a piece of information that isn’t simply extracted from a database or data warehouse, but is now easily extracted from the data mart. For example, Acquisition Age is intriguing to me and I intend to test it more. It is the age at which someone made their first gift.

Another kind of calculated variable to use, are those that quantify two or more related characteristics into a composite score, such as Affinity (or Engagement). Engagement could be calculated with a dependent variable that is collected by a survey of your staff, collecting the names of the most highly engaged individuals to your institution. You would then use this variable to train a model in order to reveal the relative engagement of your entire population. Another way to measure engagement would be to summarize key connection factors in your database and add them all up, like a score.

Another calculated variable is a simple yes/no, for whether or not an individual is an executive in a company or corporation. I have found this to be a very significant piece of information. Another is Donor Lifetime Value, the net amount you can expect someone to give during the course of their lifetime. We have all heard of RFM, or RFV (Recency, Frequency, Monetary Value), which is very important. Another powerful metric is one that measures recency in giving, where you would take the last 5 years giving total, and divide by the lifetime giving total. This would tell you very quickly what percentage of giving, any individual has made in the last 5 years.

And, of course, anything that can be quantified, can be segmented. Different strategies can be devised which effectively deal with each segment. And almost no work has to be done to do this segmentation, if the data mart is refreshed routinely, and the metrics are tracked.

FORECASTING

Another thing that a data mart can allow you to do more easily, is in the realm of forecasting. This is a great quick example to show how even very simple metrics can inform strategy. I purposely have flags for the last 5 full fiscal years so that I can forecast retention rates for the upcoming fiscal year.

A natural complement to this is a recency/frequency index which can be used to segment potential donors you want to target into more engaged, and less engaged populations.

My data mart flags are for overall giving. If I want to do a similar analysis that is more specific to a certain area of giving, I just use the query which produced the flags. I filter on any fund or allocation. With templates I have set up, I can then forecast for these more specific giving areas, very easily and quickly.

I hope that you will find some benefit to these ideas. I’ve put a lot of work in the last year or so, to reducing the pain of data preparation. I believe it has been worth it. The important thing is to not spend excessive time preparing data. I want my data preparation to be as painless as possible. I do this so that I can get to what really matters, the analysis and the insights that should follow.

Wednesday, July 15, 2015

Quantifying Alumni Engagement

A great way to quantify Alumni engagement to a institution of higher ed, would be to assemble a list of all those perceived as the most highly

engaged people to the institution and assign them a flag that could be used as

a target variable: "highly engaged". Of course, it's subjective, but

training a model on this variable could be the best possible solution. Potentially more art than science. It would acknowledge the fact of giving≠engagement. It

would necessarily take a peer-review sort-of meeting to assemble this list,

which should be a very large one. Bonus: the participants would feel ownership

of the score and more immediately buy into it.

Tuesday, October 14, 2014

SQL to display duplicate rows within a table

Because you don't want duplicate rows.

This works for me in Oracle. YMMV. Just change "table_name" to the table you wish to query.

Happy deduping!

select * from

table_name -- change table name

where id_number in (SELECT id_number

FROM (SELECT ROW_NUMBER()

OVER(PARTITION BY id_number ORDER BY id_number asc) AS r,

f.* FROM (select * from

table_name -- change table name

) f) x WHERE x.r = 2)

order by id_number

This works for me in Oracle. YMMV. Just change "table_name" to the table you wish to query.

Happy deduping!

select * from

table_name -- change table name

where id_number in (SELECT id_number

FROM (SELECT ROW_NUMBER()

OVER(PARTITION BY id_number ORDER BY id_number asc) AS r,

f.* FROM (select * from

table_name -- change table name

) f) x WHERE x.r = 2)

order by id_number

Wednesday, April 2, 2014

Flagging Executives with SQL

Here's a bit of Oracle SQL code that will flag executives in your data, for use as a potentially very important independent variable in an analysis.

SELECT id_number,

report_name,

business_title,

'Y' as executive

FROM your_table

WHERE business_title like ('Part%')

or business_title like ('Prin%')

or business_title like ('Pres%')

or business_title like ('Owne%')

or business_title like ('Foun%')

or business_title like ('Vice%')

or business_title like ('Chie%')

or business_title like ('Chai%')

or business_title like ('VP%')

or business_title like ('V.P.%')

or business_title like ('C.E.%')

or business_title like ('COO%')

or business_title like ('CFO%')

or business_title like ('CIO%')

or business_title like ('CEO%')

ORDER BY id_number

SELECT id_number,

report_name,

business_title,

'Y' as executive

FROM your_table

WHERE business_title like ('Part%')

or business_title like ('Prin%')

or business_title like ('Pres%')

or business_title like ('Owne%')

or business_title like ('Foun%')

or business_title like ('Vice%')

or business_title like ('Chie%')

or business_title like ('Chai%')

or business_title like ('VP%')

or business_title like ('V.P.%')

or business_title like ('C.E.%')

or business_title like ('COO%')

or business_title like ('CFO%')

or business_title like ('CIO%')

or business_title like ('CEO%')

ORDER BY id_number

Wednesday, October 23, 2013

Useful Datasets for Prospect Research

FREE DATASETS

FEC Data

SIC Codes

Zip codes and Avg AGI with *some* metro areas included, available

through my google docs:

Zip code data that includes lattitude and longitude for each

Most expensive zip codes, according to Forbes. From my google docs.

Various demographic data by zip

(The source of this data is MS MapPoint. It was exported to csv from the

application. Also from my google docs.)

Census population data

Various stuff here:

FREE DATA IN UNFRIENDLY FORMATS

The poorest and wealthiest places in the United States

(and other links possibly of interest at top right of page)

Regional employment data

Zip codes

Saturday, December 10, 2011

Using Free Zip Code Data for Quick and Simple Analysis

As a one‐person researcher working at a smaller college, I became very interested in discovering cost‐effective ways in which I could quickly assess my prospect pool. One of the more effective and simple ways I have found, has been to append zip code data to any list, using MS Access.

If you have not used MS Access much or at all, I urge you to add this powerful tool to your arsenal. The research paradigm has already shifted, from one‐at‐a‐time profile creation, to top‐down assessment. This trend has caught on, because it works. Anything you can employ, in terms of data manipulation, is only going to make you more effective in your position. If you are doing prospect research in an organization that has a prospect pool with prospects that are dispersed among several states, zip code‐related data appends will serve you extremely well.

Using a zip code as a geographic region is not without its problems. Zips were not created with geography in mind: they are mail routes. But because zip codes already exist in our database, and because they will tend to always map together with other zips generally, the zip is a natural variable to rely on for most projects, lending itself very nicely to further analysis.

There are many ways I have found to use zip code data, but there are two that have been the most helpful to my work. The first is in mapping or listing individuals and organizations by metro area. The second is with IRS average adjusted gross income (AGI).

Databases are typically organized so that an ID attached to a record links to the same unique ID in

other tables. What we will be doing uses the same concept, but links the zip code in a list that you have exported from your database, to a saved spreadsheet on your network or PC. In order to link the data, your zip codes must be 5 digit zips, and must be in a text format in order to preserve leading zeroes.

Zip IRS AGI data and Zip metro area data are free. When I come across tables that will be of use to other researchers, I tend to save them in my google documents. I have various tables there, so feel free to look at them all or download all of them. The two tables I will be speaking about here, are in a subfolder, and are called:

Zips_and_IRS_Avg_AGI_2008.xlsx (1MB)

ZipAndMSA.xlsx (629KB)

The link to the subfolder is: http://tinyurl.com/zip‐agi

Metropolitan Statistical Areas (MSA) are higher population density areas. Not every zip code will have an associated MSA to go with it. Linking a Zip Code to a metropolitan area will enable you to group records in your database together into such areas.

The Internal Revenue Service publishes Average Adjusted Gross Income on its website. As of this

writing the most recent data is from 2008. There are limitations to the data. For prospect identification, I have found the use of this data to work very well. Linking two tables in Access is a very simple procedure, and will enable you to create a query using fields from those two tables. In MS Access, you will need to import a table containing information from your database. This table will need to contain basic biographical information to help you identify or qualify these individuals later on. I always like to include a system ID. A five digit zip code, in a text format will be essential.

Next you will need to import the two tables that I have linked to above, via tinyurl. (In Office 2010, External Data > Import > Excel).

Once all of these tables are in Access, you can create a query to append AGI or metro values to the individuals from your database. (In Office 2010, Create > Other > Query Design). Add your database table, then add the Avg AGI table to the query. Create a table join by clicking on the zip code field in your database table, and drag to the zip code field in the Avg AGI table.

Right‐click on the join itself, and choose "Join Properties". You will want to choose the join where all

the records from your table will be displayed, but only the records from the IRS data that match will be included.

Once your table join properties are set up, click on the fields you want to include in your results. Sort

descending by IRS Average AGI to reveal wealth potential. Automatic and instantaneous prioritization for any prospecting activity. I have used this method constantly and it has yielded great results. You can also combine it with other variables to come up with a composite score.

The simple procedure I've outlined in this article is very basic and is meant to serve as only an example of a way we can quickly assess a prospect pool. Starting with wealthy zip codes might be a good idea when doing prospect identification.

If you have not used MS Access much or at all, I urge you to add this powerful tool to your arsenal. The research paradigm has already shifted, from one‐at‐a‐time profile creation, to top‐down assessment. This trend has caught on, because it works. Anything you can employ, in terms of data manipulation, is only going to make you more effective in your position. If you are doing prospect research in an organization that has a prospect pool with prospects that are dispersed among several states, zip code‐related data appends will serve you extremely well.

Using a zip code as a geographic region is not without its problems. Zips were not created with geography in mind: they are mail routes. But because zip codes already exist in our database, and because they will tend to always map together with other zips generally, the zip is a natural variable to rely on for most projects, lending itself very nicely to further analysis.

There are many ways I have found to use zip code data, but there are two that have been the most helpful to my work. The first is in mapping or listing individuals and organizations by metro area. The second is with IRS average adjusted gross income (AGI).

Databases are typically organized so that an ID attached to a record links to the same unique ID in

other tables. What we will be doing uses the same concept, but links the zip code in a list that you have exported from your database, to a saved spreadsheet on your network or PC. In order to link the data, your zip codes must be 5 digit zips, and must be in a text format in order to preserve leading zeroes.

Zip IRS AGI data and Zip metro area data are free. When I come across tables that will be of use to other researchers, I tend to save them in my google documents. I have various tables there, so feel free to look at them all or download all of them. The two tables I will be speaking about here, are in a subfolder, and are called:

Zips_and_IRS_Avg_AGI_2008.xlsx (1MB)

ZipAndMSA.xlsx (629KB)

The link to the subfolder is: http://tinyurl.com/zip‐agi

Metropolitan Statistical Areas (MSA) are higher population density areas. Not every zip code will have an associated MSA to go with it. Linking a Zip Code to a metropolitan area will enable you to group records in your database together into such areas.

The Internal Revenue Service publishes Average Adjusted Gross Income on its website. As of this

writing the most recent data is from 2008. There are limitations to the data. For prospect identification, I have found the use of this data to work very well. Linking two tables in Access is a very simple procedure, and will enable you to create a query using fields from those two tables. In MS Access, you will need to import a table containing information from your database. This table will need to contain basic biographical information to help you identify or qualify these individuals later on. I always like to include a system ID. A five digit zip code, in a text format will be essential.

Next you will need to import the two tables that I have linked to above, via tinyurl. (In Office 2010, External Data > Import > Excel).

Once all of these tables are in Access, you can create a query to append AGI or metro values to the individuals from your database. (In Office 2010, Create > Other > Query Design). Add your database table, then add the Avg AGI table to the query. Create a table join by clicking on the zip code field in your database table, and drag to the zip code field in the Avg AGI table.

Right‐click on the join itself, and choose "Join Properties". You will want to choose the join where all

the records from your table will be displayed, but only the records from the IRS data that match will be included.

Once your table join properties are set up, click on the fields you want to include in your results. Sort

descending by IRS Average AGI to reveal wealth potential. Automatic and instantaneous prioritization for any prospecting activity. I have used this method constantly and it has yielded great results. You can also combine it with other variables to come up with a composite score.

The simple procedure I've outlined in this article is very basic and is meant to serve as only an example of a way we can quickly assess a prospect pool. Starting with wealthy zip codes might be a good idea when doing prospect identification.

Subscribe to:

Posts (Atom)